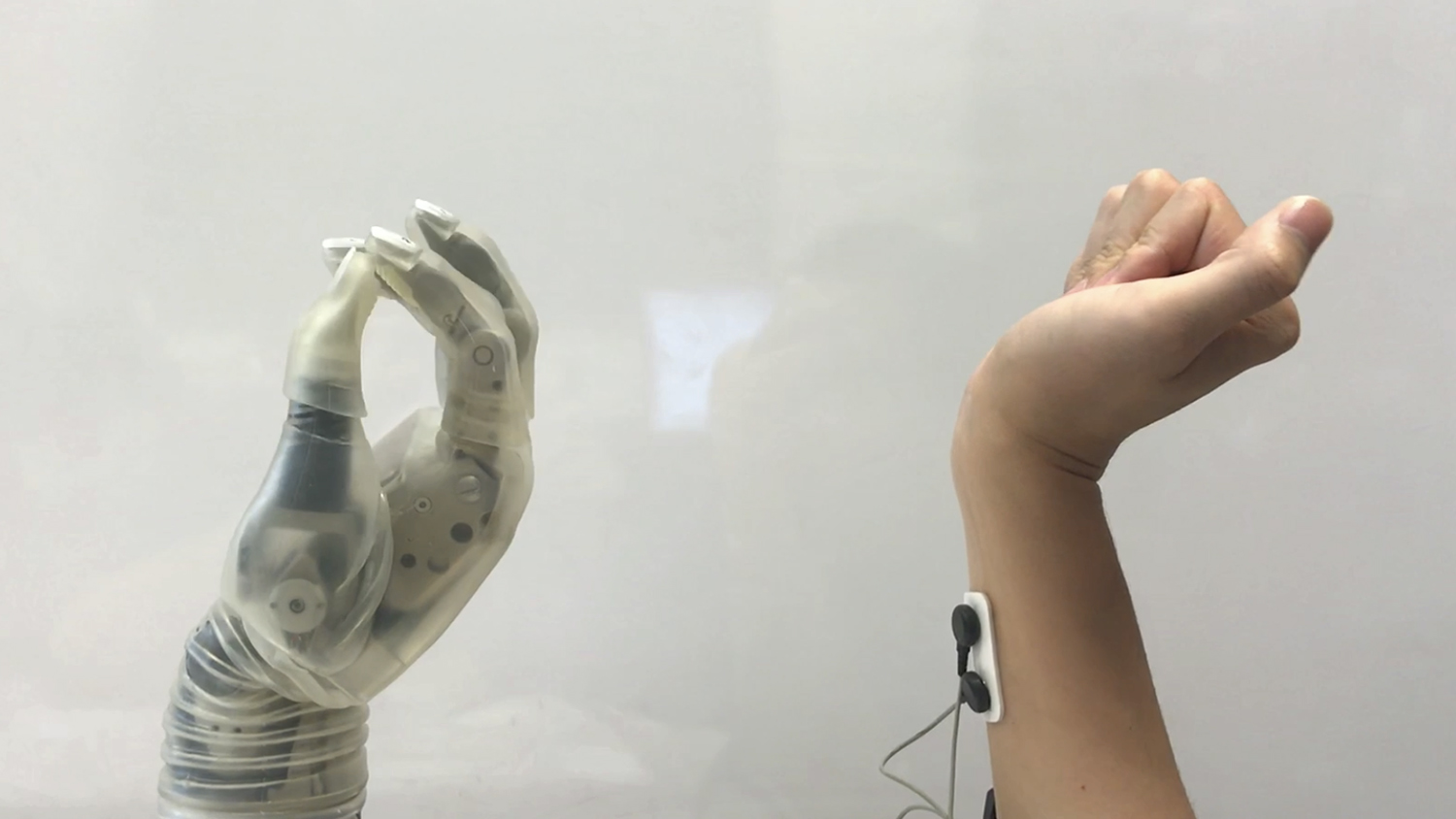

New tech may make prosthetic hands easier for patients to use

Researchers have developed new technology for decoding neuromuscular signals to control powered prosthetic wrists and hands. The work relies on computer models that closely mimic the behavior of the natural structures in the forearm, wrist and hand. The technology could also be used to develop new computer interface devices for applications such as gaming and computer-aided design (CAD).

The project, which has performed well in early testing but has not yet entered clinical trials, is led by researchers in the UNC/NC State Joint Department of Biomedical Engineering (BME).

Current state-of-the-art prosthetics rely on machine learning to create a “pattern recognition” approach to prosthesis control. This approach requires users to “teach” the device to recognize specific patterns of muscle activity and translate them into commands, such as opening or closing a prosthetic hand.
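To make the “teaching” step concrete, here is a minimal sketch of the general pattern-recognition idea, built on assumptions the article does not specify. Each window of electromyography (EMG) samples is summarized by the mean absolute value of each sensor channel, and a nearest-centroid classifier, a simple stand-in for the classifiers used in practice, maps those features to commands. The function and class names, the two-channel setup and the synthetic signals are all hypothetical.

```python
import math
import random


def mav_features(window):
    """Mean absolute value of each channel.

    `window` is a list of samples; each sample is a list of channel values.
    """
    n_channels = len(window[0])
    return [sum(abs(sample[c]) for sample in window) / len(window) for c in range(n_channels)]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class NearestCentroidDecoder:
    """Hypothetical pattern-recognition decoder: one feature centroid per trained command."""

    def __init__(self):
        self.centroids = {}

    def train(self, labeled_windows):
        # labeled_windows maps a command name to EMG windows recorded while the
        # user repeats that motion. This is the "teaching" step.
        for command, windows in labeled_windows.items():
            feats = [mav_features(w) for w in windows]
            self.centroids[command] = [sum(col) / len(col) for col in zip(*feats)]

    def predict(self, window):
        # Choose the command whose training centroid is closest to this window's features.
        f = mav_features(window)
        return min(self.centroids, key=lambda c: distance(f, self.centroids[c]))


rng = random.Random(0)


def synthetic_window(gains, n_samples=200):
    """Fake EMG: Gaussian noise scaled per channel (a stand-in for real recordings)."""
    return [[rng.gauss(0.0, 1.0) * g for g in gains] for _ in range(n_samples)]


decoder = NearestCentroidDecoder()
decoder.train({
    "open": [synthetic_window([1.0, 0.1]) for _ in range(10)],
    "close": [synthetic_window([0.1, 1.0]) for _ in range(10)],
})
print(decoder.predict(synthetic_window([1.0, 0.1])))  # open
```

The decoder only knows the signal patterns it was shown during training. If something such as a posture change alters those patterns, the stored centroids may no longer match, which is the limitation Huang describes.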

“Pattern recognition control requires patients to go through a lengthy process of training their prosthesis,” says Dr. He (Helen) Huang, a professor in BME and director of the Closed-Loop Engineering for Advanced Rehabilitation (CLEAR) core. “This process can be both tedious and time-consuming.

“We wanted to focus on what we already know about the human body,” says Huang, who is senior author of a paper on the work. “This is not only more intuitive for users, it is also more reliable and practical.

“That’s because every time you change your posture, your neuromuscular signals for generating the same hand/wrist motion change. So relying solely on machine learning means teaching the device to do the same thing multiple times: once for each different posture, once for when you are sweaty versus when you are not, and so on. Our approach bypasses most of that.”

Instead, the researchers developed a user-generic musculoskeletal model. They placed electromyography (EMG) sensors on the forearms of six able-bodied volunteers and recorded which neuromuscular signals were sent as the volunteers performed various wrist and hand motions. They then used these data to build the generic model, which translates those neuromuscular signals into commands for a powered prosthetic.

“When someone loses a hand, their brain is networked as if the hand is still there,” Huang says. “So, if someone wants to pick up a glass of water, the brain still sends those signals to the forearm. We use sensors to pick up those signals and then convey that data to a computer, where it is fed into a virtual musculoskeletal model. The model takes the place of the muscles, joints and bones, calculating the movements that would take place if the hand and wrist were still whole. It then conveys that data to the prosthetic wrist and hand, which perform the relevant movements in a coordinated way and in real time — more closely resembling fluid, natural motion.”
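The sequence Huang describes (sensor signals feed a virtual musculoskeletal model, which computes the motion the limb would make) can be illustrated with a deliberately simplified sketch. This is not the study’s model. It assumes a single wrist joint driven by one flexor and one extensor muscle, first-order activation dynamics, muscle force proportional to activation, and passive joint stiffness and damping. Every parameter value is a made-up placeholder, and the EMG inputs are assumed to be already rectified, smoothed and normalized to the range 0 to 1. The point it shows is that motion is computed from fixed physical equations rather than from a command classifier trained for each user.

```python
from dataclasses import dataclass


@dataclass
class WristModel:
    """Toy one-degree-of-freedom wrist driven by a flexor/extensor muscle pair.

    All parameter values are illustrative assumptions, not values from the study.
    """
    max_force: float = 100.0   # N, peak force of each muscle (assumed)
    moment_arm: float = 0.01   # m, tendon moment arm about the wrist (assumed)
    inertia: float = 0.002     # kg*m^2, hand inertia about the wrist (assumed)
    damping: float = 0.1       # N*m*s/rad, passive joint damping (assumed)
    stiffness: float = 2.0     # N*m/rad, passive joint stiffness (assumed)
    tau_act: float = 0.05      # s, activation time constant (assumed)
    angle: float = 0.0         # rad, flexion positive
    velocity: float = 0.0      # rad/s
    act_flex: float = 0.0      # flexor activation, 0..1
    act_ext: float = 0.0       # extensor activation, 0..1

    def step(self, emg_flex, emg_ext, dt):
        """Advance the model by dt seconds given normalized EMG envelopes in [0, 1]."""
        # First-order activation dynamics: activation lags behind the EMG envelope.
        self.act_flex += dt / self.tau_act * (emg_flex - self.act_flex)
        self.act_ext += dt / self.tau_act * (emg_ext - self.act_ext)
        # Force scales with activation; the two muscles produce opposing torques.
        muscle_torque = self.moment_arm * self.max_force * (self.act_flex - self.act_ext)
        # Joint dynamics: inertia * accel = muscle torque - stiffness * angle - damping * velocity.
        accel = (muscle_torque - self.stiffness * self.angle
                 - self.damping * self.velocity) / self.inertia
        # Semi-implicit Euler integration: update velocity first, then angle.
        self.velocity += accel * dt
        self.angle += self.velocity * dt
        return self.angle


model = WristModel()
dt = 0.001
for _ in range(2000):  # 2 s of simulated time with steady muscle signals
    angle = model.step(emg_flex=0.5, emg_ext=0.1, dt=dt)
print(round(angle, 3))  # 0.2
```

With steady inputs, the wrist settles where muscle torque balances passive stiffness: 0.01 × 100 × (0.5 − 0.1) / 2.0 = 0.2 rad of flexion. In a real device, the computed joint angles would be streamed to the prosthetic’s motors at each time step. That interface is outside the scope of this sketch.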

Lead author of the paper is Dr. Lizhi Pan, a postdoctoral researcher in Huang’s lab.

This article appeared in the Fall/Winter 2018 issue of NC State Engineering magazine.
